Evolution of Search Engines: Processes and Components

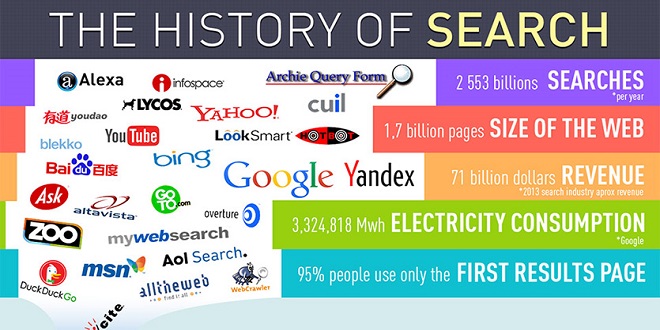

The concept of a search engine dates back decades. The first search engine, released in 1990, was named Archie by its founders (the word "archive" without the "v"). Veronica and Jughead followed soon after. 1993 saw the launch of Excite and the World Wide Web Wanderer, and in the same year Aliweb and several primitive web search tools appeared. Infoseek let webmasters submit their pages in real time.

The real game changer, however, was AltaVista: it was the first engine to offer unlimited bandwidth and to understand natural-language queries. The mid-1990s also saw the launch of WebCrawler, the Yahoo! Web Directory, Lycos, LookSmart, and Inktomi.

Google founders Larry Page and Sergey Brin created the BackRub search engine, which focused on backlinks. Unlike other search engines, which ranked pages mainly by keyword relevance, BackRub used the PageRank algorithm, which ranks a page higher when more pages, and more important pages, link to it. Page and Brin later renamed the search engine Google, and it went on to transform web search.
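To make the backlink idea concrete, here is a minimal sketch of the textbook PageRank computation by power iteration. The four-page link graph, the damping factor of 0.85 (the value suggested in the original PageRank paper), and the iteration count are illustrative assumptions; this is not Google's production ranking, which combines many other signals.

```python
def pagerank(links: dict[str, list[str]], damping: float = 0.85,
             iterations: int = 50) -> dict[str, float]:
    """Textbook PageRank by power iteration over a small link graph.

    links maps each page to the pages it links to (every target must be a key).
    """
    pages = list(links)
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}  # start from a uniform distribution
    for _ in range(iterations):
        # Every page receives the "random jump" share of rank.
        new_rank = {p: (1.0 - damping) / n for p in pages}
        for page, outlinks in links.items():
            if outlinks:
                # A page splits its rank evenly among the pages it links to.
                share = damping * rank[page] / len(outlinks)
                for target in outlinks:
                    new_rank[target] += share
            else:
                # Dangling page (no outlinks): spread its rank over all pages.
                for p in pages:
                    new_rank[p] += damping * rank[page] / n
        rank = new_rank
    return rank


if __name__ == "__main__":
    # Hypothetical four-page web: each key links to the pages in its list.
    web = {"a": ["b", "c"], "b": ["c"], "c": ["a"], "d": ["c"]}
    for page, score in sorted(pagerank(web).items(), key=lambda kv: -kv[1]):
        print(f"{page}: {score:.3f}")
```

In this toy graph, page d receives no backlinks and keeps only the baseline share (1 - 0.85) / 4 = 0.0375, while c, linked from three pages, ranks highest.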

Web Crawling

Web crawlers, or web spiders, are internet bots that help search engines keep their index of website content up to date. A crawler starts from a list of URLs (called seeds), visits those pages, and copies the hyperlinks it finds on them so those links can be visited next. Because of the vast amount of content on the Web, crawlers cannot download everything; instead, they fetch only a fraction of all web pages and usually prioritize pages that are popular, relevant, and well linked. Some spiders also normalize URLs, rewriting them into a predefined canonical format, so that the same page is not fetched and stored twice under different addresses.
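The seed-and-frontier loop and the URL normalization step can be sketched as a breadth-first crawl. To keep the example self-contained, it walks a hypothetical in-memory "web" (`FAKE_WEB`) instead of fetching pages over HTTP, and its normalization rules (lowercase scheme and host, drop default ports and `#fragments`, use `/` for an empty path) are a small assumed subset of what real crawlers apply.

```python
from collections import deque
from urllib.parse import urljoin, urlsplit, urlunsplit

# Hypothetical in-memory web: page URL -> hyperlinks found on that page.
# A real crawler would download each page and parse links out of its HTML.
FAKE_WEB = {
    "http://example.com/": ["/about", "HTTP://Example.com/products#top"],
    "http://example.com/about": ["/"],
    "http://example.com/products": ["/products/widget", "http://example.com:80/"],
    "http://example.com/products/widget": [],
}

DEFAULT_PORTS = {"http": 80, "https": 443}


def normalize(url: str) -> str:
    """Rewrite a URL into one canonical form so duplicates are crawled once."""
    parts = urlsplit(url)
    scheme = parts.scheme.lower()
    host = (parts.hostname or "").lower()
    # Keep the port only when it differs from the scheme's default.
    if parts.port is not None and parts.port != DEFAULT_PORTS.get(scheme):
        host = f"{host}:{parts.port}"
    # Drop the fragment: it does not change which document the server returns.
    return urlunsplit((scheme, host, parts.path or "/", parts.query, ""))


def crawl(seeds: list[str], max_pages: int = 100) -> list[str]:
    """Breadth-first crawl from the seed URLs; returns pages in visit order."""
    frontier = deque(dict.fromkeys(normalize(s) for s in seeds))
    seen = set(frontier)
    visited = []
    while frontier and len(visited) < max_pages:
        url = frontier.popleft()
        visited.append(url)
        for href in FAKE_WEB.get(url, []):
            # Resolve relative links against the current page, then normalize.
            link = normalize(urljoin(url, href))
            if link not in seen:
                seen.add(link)
                frontier.append(link)
    return visited


print(crawl(["http://example.com"]))
```

Thanks to normalization, `HTTP://Example.com/products#top` and `http://example.com:80/` are recognized as pages the crawler already knows, so each of the four pages is visited exactly once.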

Because search engines favor content that is fresh and frequently updated, some crawlers revisit pages more often when their content changes regularly. Other crawlers revisit all pages at the same rate, regardless of how often their content changes. Which approach a crawler takes depends on how its scheduling algorithm is written. A crawler that archives websites preserves web pages as snapshots or cached copies.
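The two revisit strategies in the paragraph above can be sketched as two ways of dividing a fixed crawl budget among pages. The function name `revisit_schedule`, the policy labels "uniform" and "proportional", and the per-page change-rate estimates are illustrative assumptions; production crawlers estimate change rates from past visits and weigh many other factors.

```python
def revisit_schedule(change_rates: dict[str, float], budget: float,
                     policy: str = "proportional") -> dict[str, float]:
    """Split a daily crawl budget (visits per day) across pages.

    change_rates holds an estimate of how many times per day each page changes.
    """
    if not change_rates:
        return {}
    if policy == "uniform":
        # Every page is revisited equally often, whether it changes or not.
        share = budget / len(change_rates)
        return {page: share for page in change_rates}
    if policy == "proportional":
        # Pages that change more often get proportionally more visits.
        total = sum(change_rates.values())
        if total == 0:
            return revisit_schedule(change_rates, budget, policy="uniform")
        return {page: budget * rate / total for page, rate in change_rates.items()}
    raise ValueError(f"unknown policy: {policy!r}")


# Hypothetical change-rate estimates for three pages of one site.
rates = {"/news": 8.0, "/blog": 1.5, "/about": 0.5}
print(revisit_schedule(rates, budget=10.0, policy="uniform"))
print(revisit_schedule(rates, budget=10.0, policy="proportional"))
# second line prints {'/news': 8.0, '/blog': 1.5, '/about': 0.5}
```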

Well-behaved crawlers identify themselves to web servers, typically through the User-agent header of their HTTP requests. Website administrators can grant complete or limited access by publishing a robots.txt file, which tells crawlers which pages they may crawl and which pages they should not access.

For example, a website's home page may be open to crawling, while pages involved in transactions, such as payment gateway pages, are blocked because they contain sensitive information. Checkout pages are usually excluded as well, because, unlike category and product pages, they contain little relevant keyword or phrase content.
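A crawler can check robots.txt rules like these with Python's standard `urllib.robotparser` module. The rules below, the `/checkout/` and `/payment/` paths, the `shop.example` host, and the crawler name `ExampleBot` are hypothetical; a live crawler would load the site's actual file with `set_url()` and `read()` instead of parsing a string.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for an online shop: every crawler may visit the
# site except the checkout and payment pages.
ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout/
Disallow: /payment/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for path in ["/", "/category/shoes", "/checkout/step-1", "/payment/confirm"]:
    url = "https://shop.example" + path
    # The crawler passes its own User-agent name when asking for permission.
    allowed = parser.can_fetch("ExampleBot", url)
    print(f"{path:20} {'crawl' if allowed else 'skip'}")
```

Note that robots.txt is advisory: compliant crawlers honor it, but it does not stop anyone from requesting a page, so sensitive pages such as payment gateways still need real access control.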

Indexing

Indexing methodologies vary from engine to engine, and search-engine owners do not disclose which algorithms they use to support information retrieval. Commonly, however, indexing relies on two structures: forward indexes and inverted indexes.

A forward index stores a list of words for each document. Building it as an intermediate step lets documents be parsed asynchronously, which eases the bottleneck of updating the main index. In other words, a forward index is a list of web pages and the words that appear on each of them. An inverted index, on the other hand, is used to locate the documents that contain the words in a user query: it is a list of words and the web pages on which each word appears. Sorting the forward index by word is one way to turn it into an inverted index.
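The relationship between the forward and inverted indexes described above can be sketched in a few lines. The three-page corpus, the simple lowercase tokenizer, and the boolean AND query semantics are illustrative assumptions; real engines also store word positions, frequencies, and compressed posting lists.

```python
import re

# Hypothetical mini-corpus: document ID -> page text.
DOCS = {
    "page1": "Search engines crawl the web",
    "page2": "An inverted index maps words to pages",
    "page3": "Crawlers feed pages to the index",
}


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_forward_index(docs: dict[str, str]) -> dict[str, list[str]]:
    """Forward index: document -> the distinct words it contains."""
    return {doc_id: sorted(set(tokenize(text))) for doc_id, text in docs.items()}


def invert(forward: dict[str, list[str]]) -> dict[str, list[str]]:
    """Inverted index: word -> sorted list of documents containing it.

    Flattens the forward index into (word, doc) pairs and sorts them by word,
    so all postings for the same word end up next to each other.
    """
    pairs = sorted((word, doc_id) for doc_id, words in forward.items() for word in words)
    inverted: dict[str, list[str]] = {}
    for word, doc_id in pairs:
        inverted.setdefault(word, []).append(doc_id)
    return inverted


def search(inverted: dict[str, list[str]], query: str) -> list[str]:
    """Return the documents that contain every word of the query."""
    postings = [set(inverted.get(word, ())) for word in tokenize(query)]
    if not postings:
        return []
    return sorted(set.intersection(*postings))


FORWARD = build_forward_index(DOCS)
INVERTED = invert(FORWARD)
print(INVERTED["index"])                 # ['page2', 'page3']
print(search(INVERTED, "index pages"))   # ['page2', 'page3']
print(search(INVERTED, "crawl web"))     # ['page1']
```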

Search Queries

A user enters a word or a string of words to find information; plain text is enough to start the retrieval process. What the user types into the search box is called a search query. This section examines the three common types of search queries: navigational, informational, and transactional.
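As a rough illustration of the three query types, the following toy classifier assigns a query to a type based on cue words. The cue-word lists and the function name `classify_query` are hypothetical; real search engines infer intent from far richer signals (click behavior, query history, result types), not fixed keyword lists.

```python
import re

# Hypothetical cue words; chosen only to illustrate the three query types.
TRANSACTIONAL_CUES = {"buy", "order", "download", "price", "cheap", "coupon"}
NAVIGATIONAL_CUES = {"login", "homepage", "website", "official", "site"}
DOMAIN_SUFFIXES = (".com", ".org", ".net")


def classify_query(query: str) -> str:
    """Label a query as transactional, navigational, or informational."""
    terms = re.findall(r"[a-z0-9.]+", query.lower())
    # The user wants to do something: buy, download, order.
    if any(term in TRANSACTIONAL_CUES for term in terms):
        return "transactional"
    # The user wants to reach a specific site, e.g. by name or domain.
    if any(term in NAVIGATIONAL_CUES or term.endswith(DOMAIN_SUFFIXES) for term in terms):
        return "navigational"
    # Everything else is treated as a request for information.
    return "informational"


for q in ["buy running shoes", "facebook login", "wikipedia.org",
          "how do search engines work"]:
    print(f"{q!r}: {classify_query(q)}")
```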

Summary

You looked at various types of queries and learned about web directories. In addition to Google, Yahoo!, and Bing, you can use specialized, topical search engines that help you find niche information within a specific category.